More often than not, the primary goal of any application is the collection, processing and showing of data. Most coders focus heavily on the “code” part of any application, yet no single application has any value without data. This data obviously needs to live somewhere - the database. For this reason, most companies have entire departments that only provide database services, such as provisioning databases, setup tables, process db operations, perform db updates, maintain the data, etc.

This is not usually seen as the sexiest of parts of development, but by no means should it therefore be neglected. Regular db backups should be made (not only for audit reasons), as retrieving data in production is nearly impossible, as aside from direct consequences, your application (or company) could suffer reputational losses that are too hard to recover from.

This article will cover how to set up a logical database schema through separate sql scripts, to ensure that you have the same database schemas across all environments.

Liquibase

Data lifecycle

You may have noticed already, that, so far, whenever the application has been shut down and restarted, all the data has been lost. For the little functionality and data setup that we have so far, this has not been an issue - after all, the application has only been running locally with only a single table.

For actually functional applications however, this is obviously not acceptable. The data that is entered once should remain in place, and only be changed when it’s been manipulated by the user, or by some admin activity.

What is liquibase?

Liquibase is an open-source framework which helps applications and developers maintain very close control over the database schemas. A schema is the description of how the entities in the databases look. This includes tables, indices, aliases, users - pretty much everything you could find in a database. It allows you to track, version and maintain consistency across environments for your schemas.

The community for liquibase is rather large, as it is the most used database schema deployment framework out there for JVM applications. The main competitor is flyway, but the offered functionality is mostly the same.

Why do we need liquibase (or flyway)

Now you may say - “Hey, I know databases are important and all, but I’ve always been doing just fine managing them by myself.”

You may be right in this approach, especially for small projects, however, there are some major advantages in letting third party tools handle the db migrations:

- Version-controlled database schema changes -> no need to remember whether you already applied the script or not

- Automatic deployment of changes on startup -> no need to order db schema changes prior to a deployment, or have applications fail to start if the script has not been applied

- Branching and merging for teams -> With versioned changes, no two developers will overwrite each other’s changes

- Easy to implement into automated pipelines -> obviously wouldn’t happen for manual changes

- Easy to rollback

- Scripts live in application -> No need for separate storage in files that cannot be found again if disaster strikes

For the reasons above, I believe it makes absolute sense to handle your database changes with a tool such as liquibase. The tool will also fail quickly if something incorrect has happened, so you will be immediately notified in case of errors. With an additional integration of an application with a real DB in your pipeline, the incorrect schema changes will even already be verified before they make it to the main branch, and all subsequent merges will immediately have the same codebase that works correctly with the DB.

How does liquibase work?

At the very core, liquibase uses a changelog which explicitly lists database changes, in order. It keeps track of previously run changes, so they would not be executed again upon redeployment. Also, this ensures the immutability of run changes. You cannot simply change some values of a script that has run, as this would not make sense. While this can be a source of frustration, it is actually extremely useful.

The changesets that have run are being tracked in the DATABASECHANGELOG, where you will find information on who has run the

changes, their timestamps, md5sum, etc. If you remove an entry from this table, that script would run again upon the next deployment,

but keep in mind that the changes from that script would still be there (unless they’re rolled back, of course).

Setup in application

Dependency

All this being said, let’s integrate liquibase into the application. The first part we need is the dependencies in the pom.xml:

<!-- https://mvnrepository.com/artifact/org.liquibase/liquibase-core -->

<dependency>

<groupId>org.liquibase</groupId>

<artifactId>liquibase-core</artifactId>

</dependency>What, no version definition required? Well, liquibase is so well-known that its version is even already defined in the spring-boot-starter-parent dependency management (in fairness, flyway is there also). So, while you can choose to use the latest version, it’s best to use the version suggested by the spring organisation.

Adding our first change

Liquibase offers multiple ways of creating changesets: SQL, XML, JSON or YAML. I would usually prefer the SQL way, as this is generally the easiest to debug in case something goes wrong - you can simply copy the script and try to execute it manually on the DB. If you use IntelliJ together with the Jpa Buddy plugin, you can let IntelliJ generate all the changesets for you, based on the difference of what is in your entities and what Jpa would generate. This will include a lot of items one might usually forget, so I will go with this option.

However, feel free to use any method you prefer.

In case you do use changelogs with plain SQL:

- Every SQL changeset must start with

--liquibase formatted sql - Additional information can be provided like so:

--changeset author:id attribute1:value1 attribute2:value2 [...]

Also, since I do not want to have one enormous changelog with all the changes in them, I will create one changelog which will then

include all the changes in separate files, as I believe this to be easier maintenance. If you want to specify which files to include,

and not simply include all, you can do so by replacing the includeAll below with multiple include items. Due to the includeAll

that I use, I have set the root just one folder higher up, to avoid an endless loop.

Let’s first create the root file db/1-0-0-changelog-root.xml:

<?xml version="1.0" encoding="UTF-8"?>

<databaseChangeLog

xmlns="http://www.liquibase.org/xml/ns/dbchangelog"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.liquibase.org/xml/ns/dbchangelog

http://www.liquibase.org/xml/ns/dbchangelog/dbchangelog-4.3.xsd">

<includeAll path="com/example/changelogs/"/>

</databaseChangeLog>Next, we notify the application that we want to use liquibase with the following addition in the application.yaml:

spring:

liquibase:

change-log: db/1-0-0-changelog-root.xmlNow, we can get to the actual versioning of the table. You may have noticed the Major-Minor-Path syntax I’m using - this is entirely optional though. So let’s add the first SQL creation with XML:

<createTable tableName="account">

<column name="id"

type="UUID">

<constraints nullable="false"

primaryKey="true"

primaryKeyName="pk_account"/>

</column>

<column name="name"

type="VARCHAR(255)">

<constraints nullable="false"/>

</column>

<column name="amount"

type="DOUBLE">

<constraints nullable="false"/>

</column>

<column name="currency"

type="VARCHAR(255)">

<constraints nullable="false"/>

</column>

<column name="description"

type="VARCHAR(255)"/>

<column name="added_on"

type="DATE">

<constraints nullable="false"/>

</column>

</createTable>Now, if you run this, everything will seem fine. You kill the app, restart it, and try to see whether you have your data still… But oh no, it’s actually still gone! What happened?

Well, we are actually still using the h2, in-memory database. As the name states, this will remove the DB entirely upon shutdown of the application.

Therefore, we will soon create a second yaml for an integrated local environment. First though,

we will change the application.yaml to not include liquibase with the following db setup:

spring:

datasource:

url: jdbc:h2:mem:testdb

liquibase:

enabled: false

server:

error:

include-message: alwaysAnd now, the application-integrated-local.yaml, which will use the properties if the application runs with the

“integrated-local” profile:

spring:

datasource:

url: jdbc:postgresql://localhost/moneymanagement?useSSL=false&allowPublicKeyRetrieval=true

username: postgres

password: changeme

driver-class-name: org.postgresql.Driver

liquibase:

change-log: db/1-0-0-changelog-root.xml

enabled: truePlease note that the server.error.include-message property does not need to be defined again, as it will take the values

from the application.yaml by default.

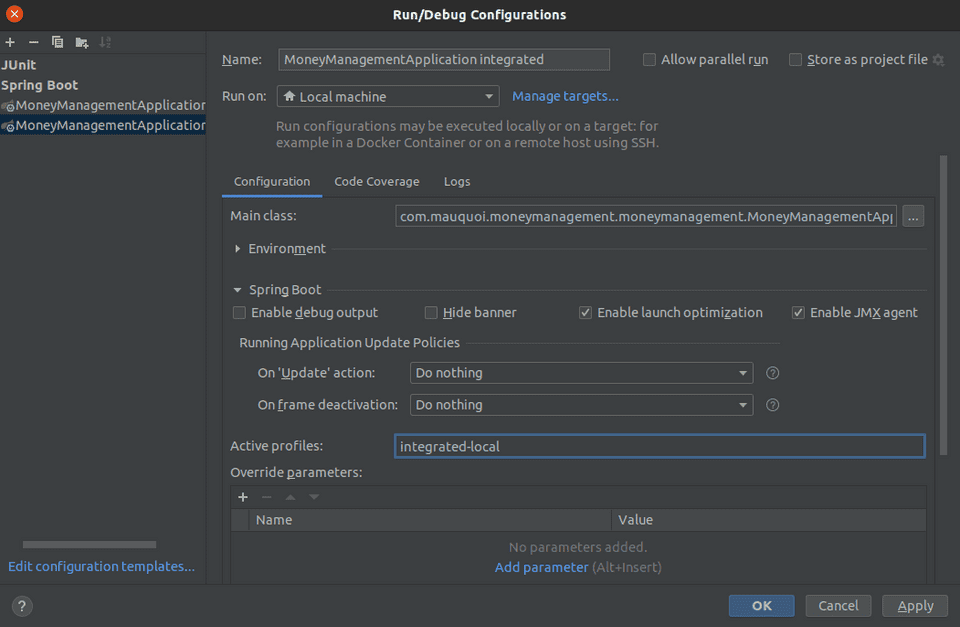

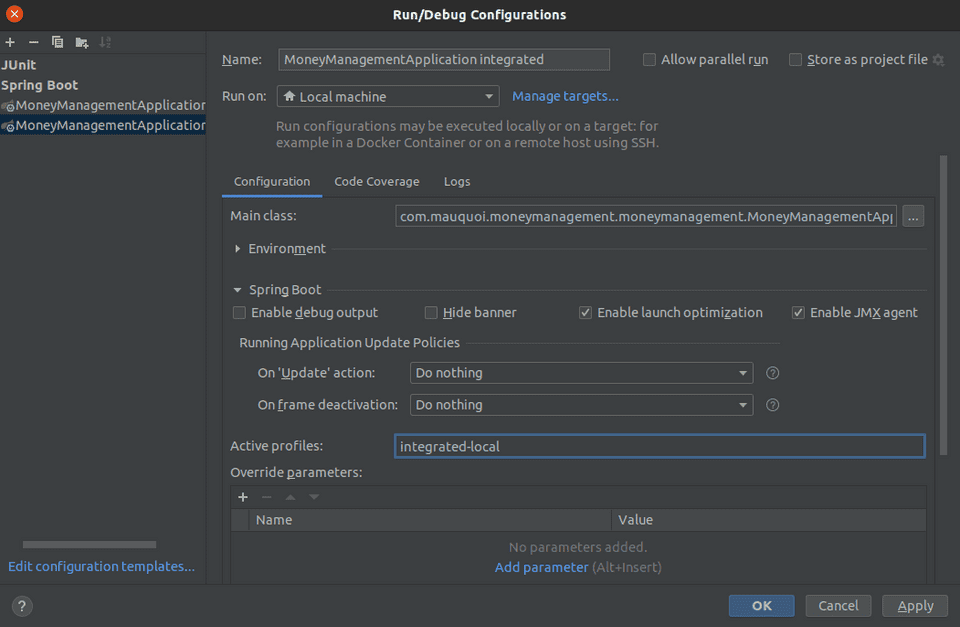

Ideally, you now create a second Run Configuration which looks like below:

Regarding the datasource - the easiest way to test DB items, I will spin up a postgres Docker image. To enable postgres

in the application, add the following dependency in your pom.xml:

<dependency>

<groupId>org.postgresql</groupId>

<artifactId>postgresql</artifactId>

<scope>runtime</scope>

</dependency>Very well, now everything is set up, and we simply need to add that DB locally in order to test.

Docker verification

For the testing, we need an actual database on our local machines now. Installing postgres is an option, of course, but it will take some setup, time, storage space, etc. It’s much easier to spin up a docker image that we can kill again when it’s no longer required. The data will still be stored, even if the image is killed (unless the tables are dropped or the volume cleared…), and will just be waiting for you when the image is started again.

I will now assume that you have Docker already installed on your machine. In order to start a postgres image, run the following

command in your terminal:

docker run --name moneymanagement-psql -p 5432:5432 -e POSTGRES_DB=moneymanagement -e POSTGRES_PASSWORD=changeme -v /data:/var/lib/postgresql/data -d postgres:12.4-alpine

The image will create a container called moneymanagement-psql, with a database called moneymanagement, and with credentials user = postgres, password = changeme.

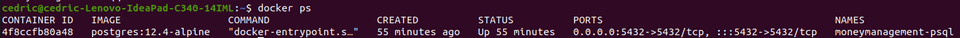

You can verify the image is running by typing docker ps, and you’ll see something along the lines of

So now, the database is set up, really quickly. So, let’s fire up our application with the new spring profile!

You will now see some log statements that show the liquibase has indeed run (feel free to run the application with a different profile, and you won’t see those statements).

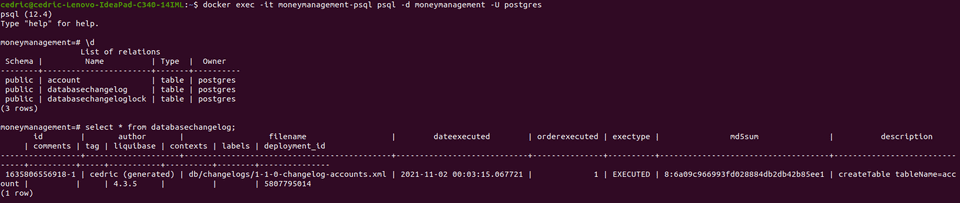

In order to verify that the tables have been created, you can run the following commands:

docker exec -it moneymanagement-psql psql -d moneymanagement -U postgres-> shell into the docker image with the correct creds-

\d-> show the existing relations- verify that the

DATABASECHANGELOGtable exists - also verify that the

DATABASECHANGELOGtable exists

- verify that the

select * from databasechangelog;-> see the changesets that have been appliedselect count(*) from account;-> 0, as no accounts currently exist

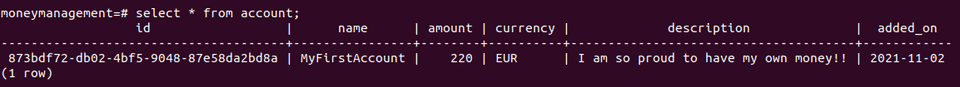

Next, we can use our Postman suite to create an account, and verify on the DB that it has been created,

using select * from account;. You should now be able to see the values you’ve entered.

Now, please kill the application, and run the previous command again - the data is still there!

Finally, you can restart your application, copy the ID from the database, and perform the GET request using it. If you were using the h2 database, you would now run into a 404 error. However, since you are simply connecting to the same database, where the data still lives, the account will be returned nicely.

Congratulations, you now have all the required tools to develop your own data layer!

As a small check, since your database is still very small at this stage, feel free to kill your application again, and change

the liquibase changeset (for example, rename the account column to acc), and start the application once more. It will now simply

not start, and instead you’ll be greeted with the following stacktrace:

You can now see that changes, once they’re in, are set in stone. They can be changed with additional changesets, but that first changeset is fully final, and you’ll have great consistency among your full development process! Of course, quickly undo this breaking change now, otherwise you’ll forget you’ve made it! ;)

Feel free to experiment some more! If you break everything, simply exec back into your container, drop all the tables,

and start the application again - all tables will be created as if for the first time! Happy developing!