Today’s article will be a bit shorter, as the holiday period is coming up. :)

If you’ve been following along on my blog, you’ll know that I am a big fan of being lazy. One part of that includes automation of testing, another means not having to worry about integration.

There are many ways to hook into changes in your codebase and automatically run operations on them. These can include simply building the project, static code analysis, running integration tests, automated deployments, etc.

In this article, I will go over how to get started with pipelines in GitHub Actions. The pipeline will not be in its final state, but I will cover how to set it up, how to build and dockerize the application in it, and how to run integration tests against the resulting Docker image.

Also, the article will only cover the integration pipeline for the Kotlin backend project.

What is GitHub Actions?

GitHub Actions is a workflow automation tool that helps you create a CI/CD pipeline for your software, defined as a series of steps in a YAML file. It is comparable to Jenkins, GitLab CI, Travis CI, and so on. One of the major advantages that you receive from GitHub Actions is that… it is free!

On top of being free, even for private repositories (within a monthly quota of free minutes), GitHub has a very large community (though perhaps not one as passionate as GitLab’s, but still), and it is actively being worked on by Microsoft. This means that you will find a huge amount of information for whatever issues you may be facing, as the community is extremely helpful.

Since GitHub may already be the go-to platform for your Git repositories, you won’t need any additional tools either: the integration is there out of the box, which reduces the number of services you have to sign up for. The results of the actions are right on the same page where you have your bugs, your settings, and of course, your code.

Finally, what is very valuable to me, is that you do not need to set up your own runner/worker that the pipeline will run on. You can simply let it run on the servers that Microsoft has dedicated to these needs. You don’t need to provision servers, ensure your own laptop is turned on, or anything like it!

Setting up pipelines

First off, we need to determine what we’d like to do on which event, as we can define different operations depending on what we’re trying to do. For instance, if we’re simply pushing to a feature branch, we might want to run tests, but not deploy the resulting artifact into production.

Here are the different steps I will do for now:

- Pull request creation
  - Build the project
  - Run integration tests
- Merge to main
  - Build the project
  - Run SonarQube analysis (in future post)
  - Run integration tests
  - Push docker image to Dockerhub

These actions will of course be extended in the future.

For now, I’ll assume you already have your Github project. So, to now create actions, we need to define the pipelines in our project.

The first step is to create the workflows in your project. In order to do so, create the folders .github/workflows in the root of your project.

Let’s start with the first of the two steps, the simple running of the build. The integration tests with Postman/Newman will follow in the next chapter.

In the workflows folder, let’s create a file called feature-build.yaml

```yaml
# This is a basic workflow to help you get started with Actions
name: Pull request to main

# Controls when the action will run. Triggers the workflow on pull request
# events, but only for those targeting the main branch
on:
  pull_request:
    branches: [ main ]

# A workflow run is made up of one or more jobs that can run sequentially or in parallel
jobs:
  # This workflow contains a job called "build" which is a maven install
  build:
    # The type of runner that the job will run on
    runs-on: ubuntu-latest

    # Steps represent a sequence of tasks that will be executed as part of the job
    steps:
      # Checks-out your repository under $GITHUB_WORKSPACE, so your job can access it
      - uses: actions/checkout@v2

      # Caches the local Maven repository between runs
      - uses: actions/cache@v1
        with:
          path: ~/.m2/repository
          key: ${{ runner.os }}-maven-jdk11-${{ hashFiles('**/pom.xml') }}
          restore-keys: |
            ${{ runner.os }}-maven-jdk11-

      # Sets up JDK 11 on the runner
      - name: Setup jdk 11
        uses: actions/setup-java@v1
        with:
          java-version: 11

      # Runs a set of commands using the runners shell
      - name: Build
        run: |
          echo "Running Build job"
          mvn clean install -Dspring.profiles.active=test
```

You may notice that I’ve put more comments than I usually do. :)

Let’s now break it down to the smaller pieces:

- Define that the pipeline runs only on PRs to main:

- Set up the environment for the pipeline, where we do multiple things:

- Define the pipeline will run on ubuntu

- Checkout the code in a Github Workspace (checkout action)

- Use caching for better performance

- Run the steps - there aren't many yet. The first step sets up the Java environment (as Kotlin is a JVM language), and the second will run the build with the test profile

on:

pull_request:

branches: [ main ]jobs:

build:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v2

- uses: actions/cache@v1

with:

path: ~/.m2/repository

key: ${{ runner.os }}-maven-jdk11-${{ hashFiles('**/pom.xml') }}

restore-keys: |

${{ runner.os }}-maven-jdk11- - name: Setup jdk 11

uses: actions/setup-java@v1

with:

java-version: 11

- name: Build

run: |

echo "Running Build job"

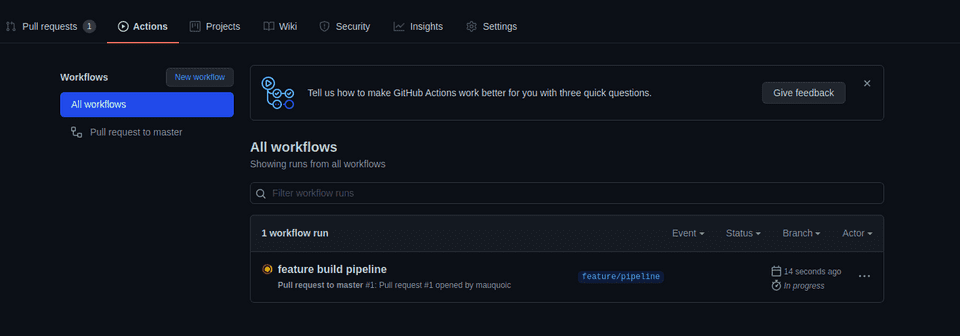

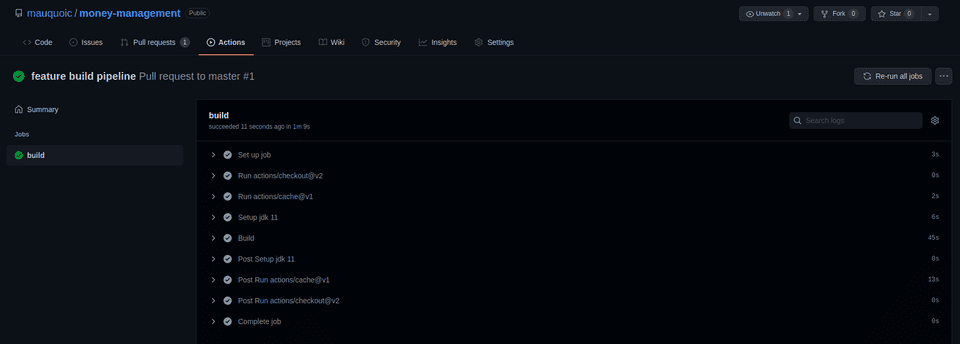

mvn clean install -Dspring.profiles.active=testNow, when you push this file, nothing will happen (you can verify in the Actions tab). However, when you then create a PR, the action will immediately take effect.

If you then click on the running action, and then on the build, you’ll see some more details (and depending on how fast you are, it may even already have completed, as is the case here).

Wonderful! Now that this pipeline has run successfully, you can feel confident about merging your code!
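A side note on the caching step used in this pipeline: newer major versions of the setup-java action can take care of the Maven cache themselves, which would let you drop the separate cache step. The fragment below is only a sketch under that assumption. It uses actions/setup-java@v4 rather than the v1 used in this article, and from v2 onwards the action also requires a `distribution` input (temurin is simply my pick here).

```yaml
# Sketch only: replaces both the actions/cache step and the "Setup jdk 11" step.
# Assumes a newer setup-java major version than the one used in this article.
- name: Setup jdk 11 with Maven cache
  uses: actions/setup-java@v4
  with:
    distribution: temurin   # required since v2; the choice of temurin is an assumption
    java-version: '11'
    cache: maven            # lets the action cache the local Maven repository
```

The explicit cache step from the article does the same job and gives you full control over the cache key, so there is no need to switch if it already works for you.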

Adding integration tests

This is already not too bad - we now have the confidence that the build will not be broken, and we don’t need to trust that lazy collaborators have gone through the trouble of running all the tests.

However, when we are on the main branch, we may want some additional steps to be taken. As mentioned before, it wouldn’t make much sense to upload the result of every single pipeline to Dockerhub, for example. When we merge to the main branch though, we should ideally have something deployable, with a new feature for example.

Also, the integration tests will be defined here. This is entirely up to you, however: feel free to add those steps to the feature branch pipeline already.
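If you would rather keep everything in one file instead of two, a single workflow can listen to both events and skip individual steps with an `if` condition. This is not what I do in this article, just a minimal sketch of the alternative; the workflow name is made up, and the steps are shortened versions of the ones used in this post.

```yaml
# Sketch of a combined workflow: same build for PRs and pushes,
# but the upload only happens on pushes to main.
name: Build and test

on:
  pull_request:
    branches: [ main ]
  push:
    branches: [ main ]

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2

      - name: Setup jdk 11
        uses: actions/setup-java@v1
        with:
          java-version: 11

      - name: Build
        run: mvn clean install -Dspring.profiles.active=test

      - name: Build image
        run: docker build -t mauquoic/money-management ./

      # Skipped for pull requests: github.event_name is 'pull_request' there.
      - name: Upload image to dockerhub
        if: github.event_name == 'push'
        run: |
          docker login --username=${{ secrets.DOCKER_USERNAME }} --password=${{ secrets.DOCKER_PASSWORD }}
          docker push mauquoic/money-management
```

Two separate files keep each pipeline easier to read, while one file avoids duplicating the common steps. Pick whichever you find easier to maintain.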

To create the pipeline for the main branch pushes or merges, add main-build.yaml to the same folder as before:

```yaml
# This is a basic workflow to help you get started with Actions
name: Push on main

# Controls when the action will run. Triggers the workflow on push
# events, but only for the main branch
on:
  push:
    branches: [ main ]

# A workflow run is made up of one or more jobs that can run sequentially or in parallel
jobs:
  # This workflow contains a job called "build" which is a maven install
  build:
    # The type of runner that the job will run on
    runs-on: ubuntu-latest

    # Steps represent a sequence of tasks that will be executed as part of the job
    steps:
      # Checks-out your repository under $GITHUB_WORKSPACE, so your job can access it
      - uses: actions/checkout@v2

      # Caches the local Maven repository between runs
      - uses: actions/cache@v1
        with:
          path: ~/.m2/repository
          key: ${{ runner.os }}-maven-jdk11-${{ hashFiles('**/pom.xml') }}
          restore-keys: |
            ${{ runner.os }}-maven-jdk11-

      # Sets up JDK 11 on the runner
      - name: Setup jdk 11
        uses: actions/setup-java@v1
        with:
          java-version: 11

      # Runs a set of commands using the runners shell
      - name: Maven Build
        run: |
          echo "Running Build job"
          mvn clean install -Dspring.profiles.active=test

      # Runs the sonar analysis
      # - name: Run SonarQube Analysis
      #   run: mvn sonar:sonar -Dsonar.projectKey=${{ secrets.SONAR_PROJECT_KEY }} -Dsonar.host.url=${{ secrets.SONARQUBE_HOST }}
      #     -Dsonar.login=${{ secrets.SONARQUBE_TOKEN }}

      # Build Image
      - name: Build image
        run: docker build -t mauquoic/money-management ./

      # Integration tests
      - name: Run integration tests
        run: |
          echo "---------- Running image ----------"
          docker run --name money-management \
            -p 8080:8080 \
            -e spring.profiles.active=test \
            -d \
            mauquoic/money-management
          echo "---------- Image started ----------"
          echo "---------- Running postman tests ----------"
          newman run src/test/resources/application-tests.json
          # mvn spring-boot:run -Dspring-boot.run.profiles=test

      # Image upload
      - name: Upload image to dockerhub
        run: |
          echo "---------- Logging in to docker ----------"
          docker login --username=${{ secrets.DOCKER_USERNAME }} --password=${{ secrets.DOCKER_PASSWORD }}
          echo "---------- Pushing image ----------"
          docker push mauquoic/money-management
```

The first couple of steps are exactly the same as for the previous pipeline. However, it does make sense to ensure that everything still plays along nicely.

So, again, let’s break down the additional steps:

- Commented out SonarQube setup. This will be done in some other article. The running of the command is simple enough, but I don't want to set up the SonarQube on my server yet...
- Build the docker image, with the name and the path to the Dockerfile
- Run the integration tests. Here we use a multiline command, as there are several actions to be done.
  - Spin up the image
  - Run the integration tests using Newman. In case you're wondering what Newman is - it's simply Postman, but without the nice UI. This means that you can export the tests and run them against the docker image that's spun up! Pretty sweet, right?
- Upload the image to the Dockerhub. This would only be done if all the past steps were successful, of course, so we know that we now have a functional image that we can pull in other places!
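One thing to keep in mind with the integration test step: the container is started in detached mode (`-d`), so Newman may start firing requests before the application is ready to answer them. If you ever run into that, a small waiting step between starting the container and running Newman can help. The fragment below is a minimal sketch, not part of my pipeline. It assumes the application answers HTTP requests on http://localhost:8080/ (the path is my assumption, any endpoint will do), and the 30 attempts with a 2 second pause are arbitrary values.

```yaml
# Sketch only: place this after the container is started and before Newman runs.
- name: Wait for the application to start
  run: |
    # Try for roughly a minute; curl fails as long as nothing answers over HTTP.
    for i in $(seq 1 30); do
      if curl --silent --output /dev/null http://localhost:8080/; then
        echo "Application is up"
        exit 0
      fi
      sleep 2
    done
    echo "Application did not start in time"
    # Print the container logs to make the failure easier to debug.
    docker logs money-management
    exit 1
```

Since curl is called without `--fail`, any HTTP response (even a 404) counts as "up", which is enough to know that the server is accepting requests.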

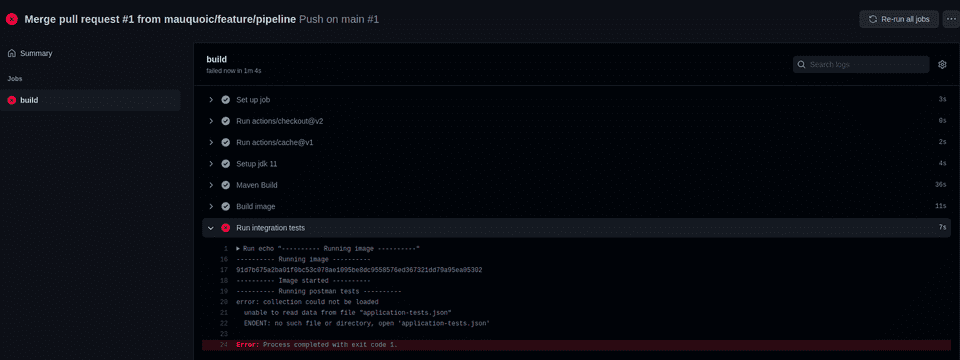

Now, I was too excited and wanted to try out the pipeline. Of course, attentive readers will notice that there are some parts I’ve forgotten to do, so the pipeline failed!

It’s fairly logical that the pipeline failed - I’ve forgotten the following steps:

- Adding the test suite for the integration tests

- Defining those secrets for the upload to the dockerhub (it didn’t get to those, but it’s still something that has been missed)

So now, let’s fix those issues!

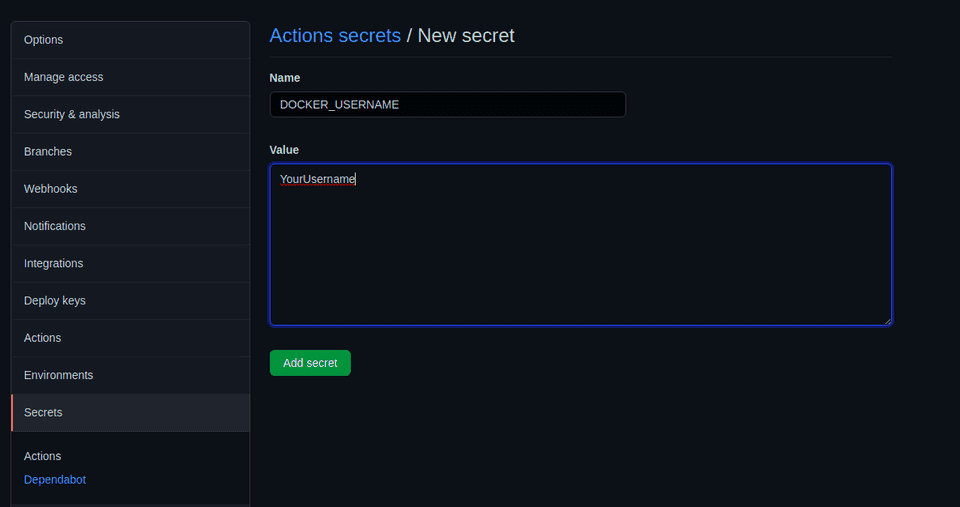

Define the secrets

First, we want to define the secrets. It may be backwards, but those do not need to be pushed, whereas the test suites do, so if we were to tackle the tests first, the pipeline would fail once more.

In order to add the secrets, we need to navigate to the Settings tab, then choose Secrets, and finally click on Add Repository Secret. It’s very important to note that once the secret is stored, you will NOT be able to see that value again, so make sure that you remember it or have it stored somewhere safe!

We now need to store the secrets DOCKER_USERNAME and DOCKER_PASSWORD.
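As a small variation on the upload step, the secrets can also be handed to the step as environment variables, with the password piped into `docker login` through `--password-stdin` so that it does not show up on the command line. This is a sketch of an alternative, not what the workflow in this article does; the secret names are the two defined above.

```yaml
# Sketch only: same upload step, but the password is read from stdin.
- name: Upload image to dockerhub
  env:
    DOCKER_USERNAME: ${{ secrets.DOCKER_USERNAME }}
    DOCKER_PASSWORD: ${{ secrets.DOCKER_PASSWORD }}
  run: |
    echo "---------- Logging in to docker ----------"
    echo "$DOCKER_PASSWORD" | docker login --username "$DOCKER_USERNAME" --password-stdin
    echo "---------- Pushing image ----------"
    docker push mauquoic/money-management
```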

Add the integration tests

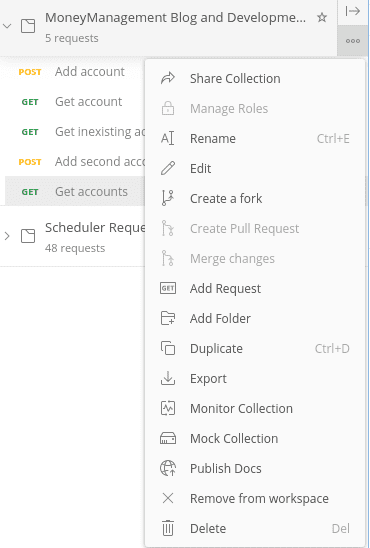

Very well, now we need to export the integration tests. In order to do this, we need to open Postman, choose the collection, click on the three dots, and select Export.

Next, copy the exported JSON file into the folder src/test/resources/.
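Note that the file name has to match the path that the workflow passes to Newman, which is src/test/resources/application-tests.json in my case. Should Newman not be available on the runner you use, it can be installed through npm right before the tests. The fragment below is a sketch under that assumption and leaves out the container start-up for brevity.

```yaml
# Sketch only: install Newman explicitly instead of relying on the runner image.
- name: Run postman tests
  run: |
    npm install -g newman
    # The path must point to the exported collection in the repository.
    newman run src/test/resources/application-tests.json
```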

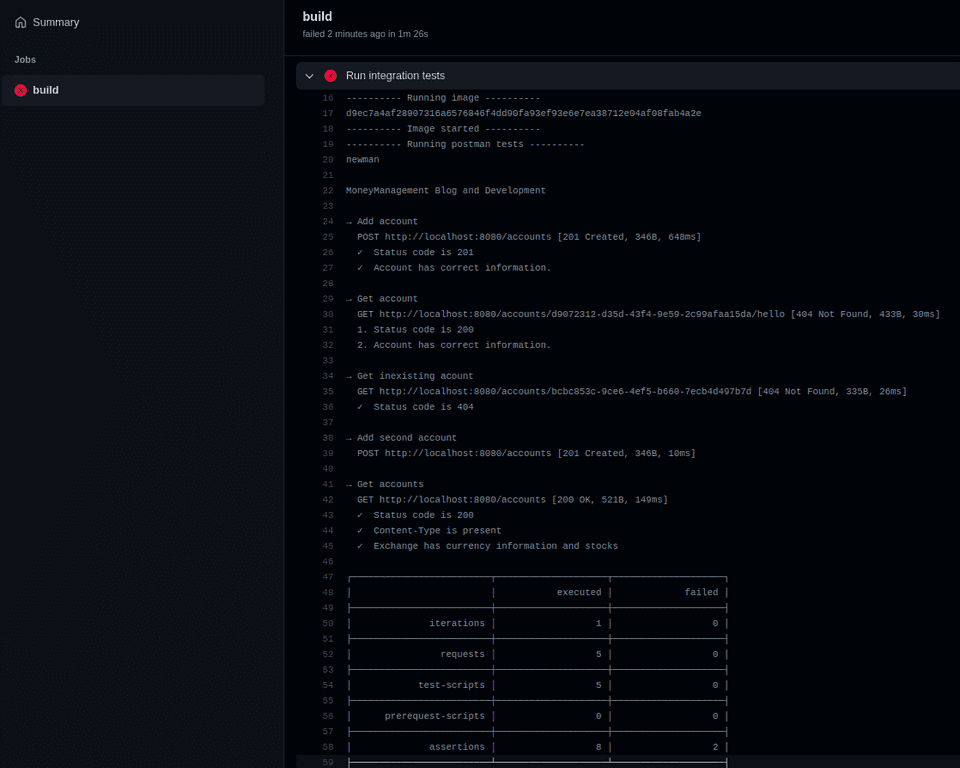

I have inserted an error on purpose, just to verify that the pipeline will indeed fail when some tests are unsuccessful.

After pushing this json, the pipeline does indeed fail again, but, as you can see, it now fails on the test where I’ve manipulated the URL (the second test). All the succeeding assertions have little checkmarks next to them, whereas the failed ones are numbered. It also shows a nice summary at the end, where it states that two assertions have failed.

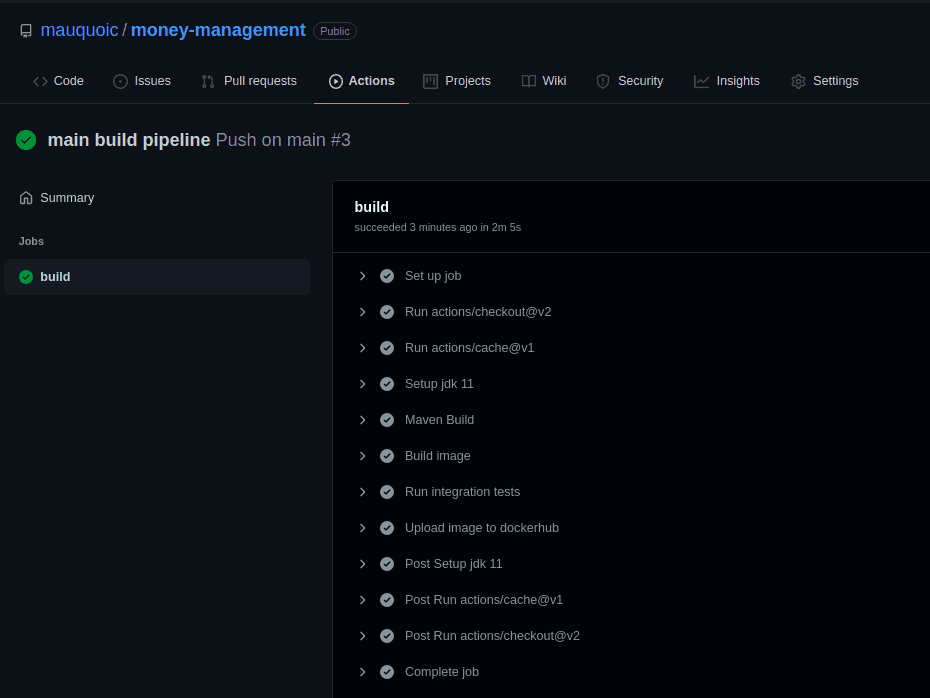

Now, if we fix that test, export the new collection, and then push those changes, all checks will pass, and the pipeline ends with that nice green color!

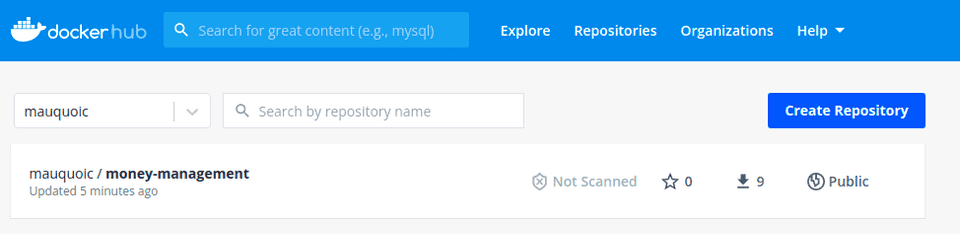

This looks great, but we want to have one final verification, and see whether the image was indeed uploaded to the Dockerhub. After logging in, I see that, indeed, the image is there, ready for usage!

This means that technically, new pipeline steps could already deploy that image to your test environment, for example. And all subsequent pushes on main will undergo the exact same steps, so without any manual interaction, you will have the newest image at all times! How awesome is that?

What do you think? Which additional steps would you add to the pipeline? Or which additional operations warrant their own pipelines? The only limit is your imagination!